Philip Bump, Jesse Watters, and the Perennial Debate Over How Polls Work

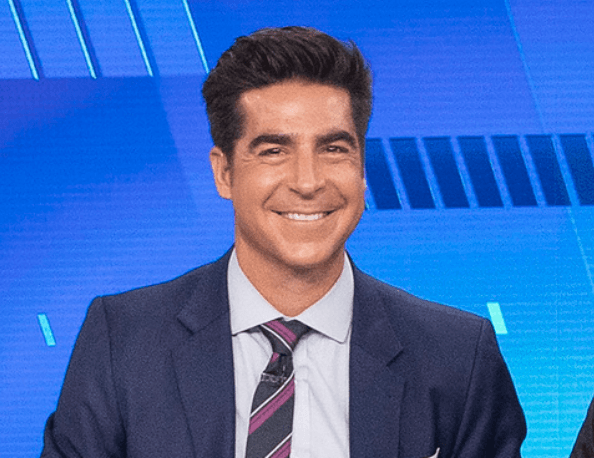

Over at the Washington Post, Philip Bump has a snarky piece titled “Jesse Watters doesn’t know how polls work.” Its theme is, well, that Fox News host Jesse Watters doesn’t know how polls work. It’s not bad, but neither is it all good. Regardless, it offers a useful foil for exploring how polls actually work, and an opportunity to revisit a perennial debate over what to do about polls’ internals.

Watters’ argument

Basically, Watters engaged in a venerable practice known as poll unskewing. Poll unskewing happens when someone looks at a poll’s internals, sees something they don’t like, and “adjusts” the poll back to reality. It’s named after a(n in)famous website from the 2012 election, whose proprietor concluded that polls were badly understating Mitt Romney’s chances and adjusted them accordingly. It … did not end well for him.

To explain a bit deeper: Good pollsters routinely publish what are known as “crosstabs.” These crosstabs take the larger sample and describe how subgroups may be voting. So, a poll may have Trump behind Harris by two points overall; its internals, however, might show her leading Trump by, say, 50 points among black voters, or trailing by five among whites.

This provides a target-rich environment for partisans who have a hard time processing the possibility that their candidate is behind. It really is a “both sides” phenomenon. Polls showing former president Donald Trump performing unusually well for a Republican among black and Hispanic voters have been a regular target for the left this cycle. On the right? The more common refrain is that polls are overstating the number of Democrats.

That was Watters’ approach. He states “[The media’s] juicing the polls! I just found out, this country identifies R plus-2. And all the polls we’ve seen with Kamala doing so well, their samples? R plus-7! R plus-8! R plus-4! Trump is going to kill her!”

Bump’s response

As Bump correctly points out, Watters misspeaks here. He really meant to say that the polls were D plus-7 or so. In other words, when you look at the crosstabs, they show a lot more Democrats in the sample than Republicans. Watters contrasts this with a poll from Gallup suggesting that Republicans in fact outnumber Democrats by two points in the country right now, and concludes that, among the actual folks who will vote, Trump must be blowing Harris out.

Bump’s counterargument has three interrelated prongs. It starts off well enough, but then veers dangerously close to validating Watters’ argument inadvertently. The first argument is that if you look at Gallup’s numbers closely, independents are the most numerous party (of sorts) in America. The second is that the political analysis firm L2 estimates that Democrats are actually +8 in America. The third is that pollsters often weight their polls to correct for an undersampling of Democrats or Republicans.

It’s true that “independent” is the most common political self-identification in modern America. The problem is that most independents are actually closet partisans. That is to say, though they may claim independence, when you press them, it turns out that they regularly favor one party or another. Indeed, when Gallup does press independents on their political leanings, the Republican edge grows to R+6.

Bump then turns to modeled data from L2, suggesting that Democrats are far more numerous. I urge the reader to hold that thought for now, but we should note that this is an estimate. States are roughly split on whether they allow registration by party. Nineteen states (including Michigan, Ohio, and Wisconsin) don’t have party registration at all. We should also note that actual registration is a tricky indicator; Democrats have longstanding registration edges in North Carolina and Florida, for example, notwithstanding the fact that Republicans routinely win those states.

Bump’s final point is that pollsters will often weight their samples. As Bump puts it, “Let’s say that you ask 100 Democrats and 80 Republicans how they plan to vote in an election, with 90 percent of each group picking their party’s candidate and 10 percent selecting the other party’s. By itself, that’s a 54 percent to 46 percent margin in favor of the Democrat. If you think, though, that the electorate will be 50-50? You can simply treat each Democrat response as eight-tenths of a response – what pollsters call ‘weighting.’ The result is a 50-50 race.”
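Bump’s arithmetic is easy to check. What follows is a minimal sketch, not anything Bump or any pollster published: the function name `dem_share` and its parameters are made up for illustration, and the only inputs are the numbers in his quote (100 Democrats, 80 Republicans, 90 percent party loyalty, and a weight of 0.8 on each Democratic response).

```python
def dem_share(n_dem, n_rep, loyalty=0.90, dem_weight=1.0, rep_weight=1.0):
    """Share of the (weighted) sample backing the Democratic candidate.

    Hypothetical helper: assumes every respondent is a Democrat or a
    Republican and that `loyalty` of each group backs its own party.
    """
    # Democratic votes = loyal Democrats + crossover Republicans.
    dem_votes = dem_weight * n_dem * loyalty + rep_weight * n_rep * (1 - loyalty)
    total = dem_weight * n_dem + rep_weight * n_rep
    return dem_votes / total


raw = dem_share(100, 80)                       # every response counts once
weighted = dem_share(100, 80, dem_weight=0.8)  # each Democrat counts as 0.8

print(f"Unweighted: {raw:.1%} for the Democrat")
print(f"Weighted:   {weighted:.1%} for the Democrat")
```

Unweighted, the Democrat gets 98 of 180 responses, which is Bump’s 54-46 split. With the 0.8 weight, the 100 Democrats count as 80, and the race comes out even.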

Why Watters is probably wrong

This, however, is exactly Watters’ point! There are areas where weighting is not terribly controversial. We know, for example, from the decennial census that around 12% of Americans are non-Hispanic black. If you receive a poll sample back that is only 8% black overall, you can weight black responses more to give yourself a sample that truly looks like the American population.
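For the mechanically inclined, the race example above can be sketched in a few lines. This is a simplified illustration of weighting to a known population share, under assumptions of my own: a hypothetical 500-person sample, only two groups, and a helper named `poststratification_weights` that is not taken from any pollster’s actual code. Real weighting schemes juggle many variables at once.

```python
def poststratification_weights(sample_counts, population_shares):
    """Weight for each group = its population share / its sample share."""
    n = sum(sample_counts.values())
    return {
        group: population_shares[group] / (count / n)
        for group, count in sample_counts.items()
    }


# Hypothetical 500-person sample that came back 8% black.
sample_counts = {"black": 40, "other": 460}
# Target: around 12% black, per the census figure cited above.
population_shares = {"black": 0.12, "other": 0.88}

weights = poststratification_weights(sample_counts, population_shares)
weighted_counts = {g: sample_counts[g] * weights[g] for g in sample_counts}

for group in sample_counts:
    print(f"{group}: weight {weights[group]:.3f}, "
          f"weighted count {weighted_counts[group]:.0f}")
```

Each black respondent ends up counting as 1.5 responses, and everyone else as slightly less than one, so the weighted sample matches the 12% target.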

Weighting to party registration, though, is different – and notoriously controversial. It is something we have fought over for my entire 20-year career covering elections. Race is (mostly) a concept that doesn’t change throughout a person’s life. Partisanship, however, is something that can change from day to day.

Not only that, it can change depending on the question. As the above suggests, you might get different answers depending on whether you ask what party individuals identify with (R+2, according to Gallup), what party they identify with after pushing independents (R+6, according to Gallup), or what party they are registered with (D+8, as estimated by L2). You will get a different answer still if you ask people how they usually vote. Thus, pollsters with identical samples might get different reported partisanship results depending on question wording. Also, all of these answers are based on samples, which means that they themselves have error margins. In other words, unlike race, where we have pretty stable population counts, we lack a good lodestar for weighting party identification.

This opens a pollster up to the possibility that she is weighting to a value that is itself an estimate. Worse, she might end up weighting to a value that “sounds right.” That is a dangerous thing to do, since whether something “sounds right” ultimately involves smuggling in one’s prior views of things. This is particularly dangerous given that party identification correlates heavily with election outcomes. By weighting to partisanship, a pollster risks “fixing” the results of the poll. Again, that’s essentially Watters’ argument: that pollsters are juicing the polls to make things look better for Democrats.
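To see how much the choice of target matters, here is a rough sketch that weights one and the same sample to each of the three partisanship figures mentioned above. It rests on heavy simplifying assumptions that are mine, not Gallup’s or L2’s: there are no independents, each party gets 90 percent of its own identifiers (borrowed from Bump’s example), and the helper `topline_margin` is hypothetical.

```python
def topline_margin(dem_party_margin, loyalty=0.90):
    """Democratic candidate's margin (in points) once a two-party sample
    is weighted so Democrats lead Republicans by `dem_party_margin` points.

    Hypothetical helper: assumes no independents and equal party loyalty.
    """
    dem_share_of_sample = (100 + dem_party_margin) / 200
    rep_share_of_sample = 1 - dem_share_of_sample
    # Democratic vote = loyal Democrats + crossover Republicans.
    dem_vote = (dem_share_of_sample * loyalty
                + rep_share_of_sample * (1 - loyalty))
    return (2 * dem_vote - 1) * 100


# Negative numbers mean a Republican edge in party identification.
targets = {
    "Gallup party ID (R+2)": -2,
    "Gallup with leaners (R+6)": -6,
    "L2 registration estimate (D+8)": 8,
}

for label, party_margin in targets.items():
    print(f"{label}: candidate margin {topline_margin(party_margin):+.1f}")
```

Under these assumptions, every point of party-identification margin in the target moves the topline by 0.8 points. Swapping one defensible-sounding target for another is enough to flip the leader.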

So where does this leave us? The best arguments against poll unskewing are missing here. In fact, one ought not take crosstabs that seriously. The first reason is that crosstab samples are frequently small. If a poll sample is 500 people, then your estimate of black voting patterns will probably be based upon 60 or so respondents; this sample will have a huge error margin. The second is that while “trust the experts” is a highly overrated heuristic, there’s a good chance that your average pollster really does know more about American demographics than you do. Pollsters will frequently get it wrong. But so will you.

The third is simply this: We “do the social science” for a reason. If we are going to reject polls showing Trump earning, say, 20% of the black vote outright, then why do we conduct the polls in the first place? That doesn’t mean that common sense must be left at the curb. It just means that when we draw on it, all the dangers associated with declaring that an outcome “doesn’t sound right” come rushing in. Likewise, elections with electorates heavily favoring Democrats have occurred. It’s not impossible that this will be one of them.
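The point about small crosstab samples can be made concrete with the textbook margin-of-error formula. This is a back-of-the-envelope sketch that assumes simple random sampling and a 95% confidence level; it ignores the extra uncertainty that weighting introduces, so real-world error margins would typically be somewhat larger. The function name is my own.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion p from n respondents,
    assuming simple random sampling (no design effect from weighting)."""
    return z * math.sqrt(p * (1 - p) / n)


full_sample = margin_of_error(500)  # the whole poll
subgroup = margin_of_error(60)      # the 60 or so black respondents

print(f"Full sample (n=500): +/- {full_sample:.1%}")
print(f"Subgroup (n=60):     +/- {subgroup:.1%}")
```

The full sample carries an error margin of a bit over four points. The 60-person subgroup carries one of nearly 13 points in either direction, which is wide enough to make most crosstab “findings” indistinguishable from noise.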

Where Watters may be right

With all that said, there is a sound social science concept of which we should be aware. In fact, it is particularly dangerous right now. It is known as partisan non-response bias. The idea is this: When events favor one political side or the other, partisans become more (or less) likely to take a poll.

The intuition is this: After Biden’s disastrous June debate, Democrats really didn’t want to talk about the election. Republicans, on the other hand, wanted to talk about nothing else. It was probably the best time to be a Republican in a presidential election since, well, Mitt Romney beat Barack Obama in the first presidential debate of 2012. Some of Trump’s poll lead in July probably was due to a newfound Republican eagerness to respond to polls.

At the same time, Democrats are overwhelmingly engaged right now. They have reason to believe they just avoided a near-death scenario and potential wipeout. They have a new presidential nominee, about whom they are overwhelmingly excited, and they like the vice presidential selection. They would love nothing more than to talk to you, or a pollster, about the 2024 election.

This really does put a bit of an asterisk on a poll showing a D+7 advantage right now. It is reasonable to think that at least part of that edge could be due to an asymmetry in enthusiasm. What does this mean for people hoping to interpret polls objectively? That is a really good question. It is almost definitionally difficult to detect partisan non-response bias, since you can’t talk to people who didn’t respond to polls. While a surge in party identification can be an indicator, it could also be a manifestation of enthusiasm, as previously self-identifying independents decide the Democratic Party is for them after all. Even worse, if that enthusiasm gap carries over to Election Day, it will probably manifest in actual election results.
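A toy calculation shows how little asymmetry it takes. The numbers below are invented for illustration and are not estimates of actual response rates: the sketch assumes a population split evenly between the two parties, no independents, and a hypothetical helper called `observed_party_margin`.

```python
def observed_party_margin(dem_pop_share, dem_response_rate, rep_response_rate):
    """Democratic party-ID margin (in points) among poll respondents,
    given each party's population share and willingness to respond.

    Hypothetical helper: assumes a two-party population.
    """
    dem_respondents = dem_pop_share * dem_response_rate
    rep_respondents = (1 - dem_pop_share) * rep_response_rate
    return (100 * (dem_respondents - rep_respondents)
            / (dem_respondents + rep_respondents))


# Evenly split population, both sides equally willing to answer (1% each).
even = observed_party_margin(0.5, 0.010, 0.010)

# Same population, but Democrats are 15% more likely to pick up the phone.
enthused = observed_party_margin(0.5, 0.0115, 0.010)

print(f"Equal response rates:    D{even:+.1f}")
print(f"Democrats 15% more keen: D{enthused:+.1f}")
```

With these made-up inputs, a modest 15 percent edge in willingness to respond turns a perfectly even electorate into a sample that looks roughly D+7, without a single voter changing sides.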

In the end, the answer is this: If you’re worrying about this type of thing, remember what the polls really tell you. They’re snapshots in time. If this really is partisan non-response bias, it will cool down by September (at least until Trump’s sentencing in New York potentially upends things). Most importantly, we don’t conduct elections by poll. We conduct them by votes, and that’s the tally that ultimately matters.
